Discrete Multivariate R.V.s

Definition (Joint CDF) The joint CDF of r.v.s $X$ and $Y$ is the function $F_{X,Y}$ given by

$$F_{X,Y}(x,y) = P(X\leq x, Y\leq y)$$

Definition (Joint PMF) The joint PMF of discrete r.v.s $X$ and $Y$ is the function $p_{X,Y}$ given by

$$p_{X,Y}(x,y) = P(X=x, Y=y)$$

Definition (Marginal PMF) For discrete r.v.s $X$ and $Y$, the marginal PMF of $X$ is

$$P(X=x) = \sum_{y} P(X=x, Y=y)$$

Definition (Conditional PMF) For discrete r.v.s $X$ and $Y$, the conditional PMF of $X$ given $Y=y$ is

$$P(X=x|Y=y) = \frac{P(X=x, Y=y)}{P(Y=y)}$$

defined for all $y$ with $P(Y=y)>0$

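Definition (Independence of Discrete R.V.s) Discrete random variables $X$ and $Y$ are independent if for all $x$ and $y$

$$P(X=x, Y=y) = P(X=x)P(Y=y)$$

This is also equivalent to the condition that, for all $y$ and all $x$ with $P(X=x)>0$,

$$P(Y=y|X=x) = P(Y=y)$$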
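
As a rough illustration of the discrete definitions, the sketch below builds a small joint PMF and derives marginal and conditional PMFs from it. The table values and the helper names (`marginal`, `conditional_x_given_y`, `is_independent`) are made up for this example; exact fractions are used so the independence check is not affected by rounding.

```python
from fractions import Fraction

# Hypothetical joint PMF: joint[(x, y)] = P(X=x, Y=y). Values are illustrative only.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(3, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 4),
}

def marginal(joint, axis):
    """Marginal PMF: sum the joint PMF over the other variable (axis 0 -> X, axis 1 -> Y)."""
    out = {}
    for pair, p in joint.items():
        out[pair[axis]] = out.get(pair[axis], 0) + p
    return out

def conditional_x_given_y(joint, y):
    """P(X=x | Y=y) = P(X=x, Y=y) / P(Y=y); requires P(Y=y) > 0."""
    p_y = marginal(joint, 1)[y]
    return {x: p / p_y for (x, y2), p in joint.items() if y2 == y}

def is_independent(joint):
    """Check P(X=x, Y=y) == P(X=x) P(Y=y) for every pair (x, y)."""
    p_x, p_y = marginal(joint, 0), marginal(joint, 1)
    return all(joint.get((x, y), 0) == p_x[x] * p_y[y] for x in p_x for y in p_y)

print(marginal(joint, 0))
print(conditional_x_given_y(joint, 0))
print(is_independent(joint))
```

In this table $P(X=0,Y=0) = 1/8$ while $P(X=0)P(Y=0) = 3/16$, so the check reports that $X$ and $Y$ are dependent.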

Continuous Multivariate R.V.s

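Definition (Joint PDF) If $X$ and $Y$ are continuous with joint CDF $F_{X,Y}$, then their joint PDF is the derivative of the joint CDF with respect to $x$ and $y$

$$f_{X,Y}(x,y) = \frac{\partial^2}{\partial x\,\partial y}F_{X,Y}(x,y)$$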

Definition (Marginal PDF) If $X$ and $Y$ are continuous with joint PDF $f_{X,Y}$, then the marginal PDF of $X$ is

$$f_X(x) = \int_{-\infty}^{\infty} f_{X,Y}(x,y)\,dy$$

Definition (Conditional PDF) For continuous r.v.s $X$ and $Y$ with joint PDF $f_{X,Y}$, the conditional PDF of $Y$ given $X=x$ is

$$f_{Y|X}(y|x) = \frac{f_{X,Y}(x,y)}{f_X(x)}$$

defined for all $x$ with $f_X(x)>0$

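Definition (Independence of Continuous R.V.s) Random variables $X$ and $Y$ are independent if for all $x$ and $y$

$$F_{X,Y}(x,y) = F_X(x)F_Y(y)$$

If $X$ and $Y$ are continuous with joint PDF $f_{X,Y}$, this is equivalent to the condition that for all $x$ and $y$

$$f_{X,Y}(x,y) = f_X(x)f_Y(y)$$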

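Theorem (2D LOTUS) Let $g$ be a function from $R^2$ to $R$

If $X$ and $Y$ are discrete

$$E(g(X,Y)) = \sum_{x}\sum_{y} g(x,y)P(X=x,Y=y)$$

If $X$ and $Y$ are continuous

$$E(g(X,Y)) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} g(x,y)f_{X,Y}(x,y)\,dx\,dy$$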
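
A minimal sketch of the discrete case of 2D LOTUS: the expectation of $g(X,Y)$ is a weighted sum of $g(x,y)$ over the joint PMF, with no need to find the distribution of $g(X,Y)$ first. The joint PMF values and the helper name `expect` are assumptions made for illustration.

```python
from fractions import Fraction

# Hypothetical joint PMF: joint[(x, y)] = P(X=x, Y=y). Values are illustrative only.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(3, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 4),
}

def expect(g, joint):
    """Discrete 2D LOTUS: E[g(X, Y)] = sum over (x, y) of g(x, y) * P(X=x, Y=y)."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

e_xy = expect(lambda x, y: x * y, joint)    # E(XY)
e_sum = expect(lambda x, y: x + y, joint)   # E(X + Y)
print(e_xy, e_sum)
```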

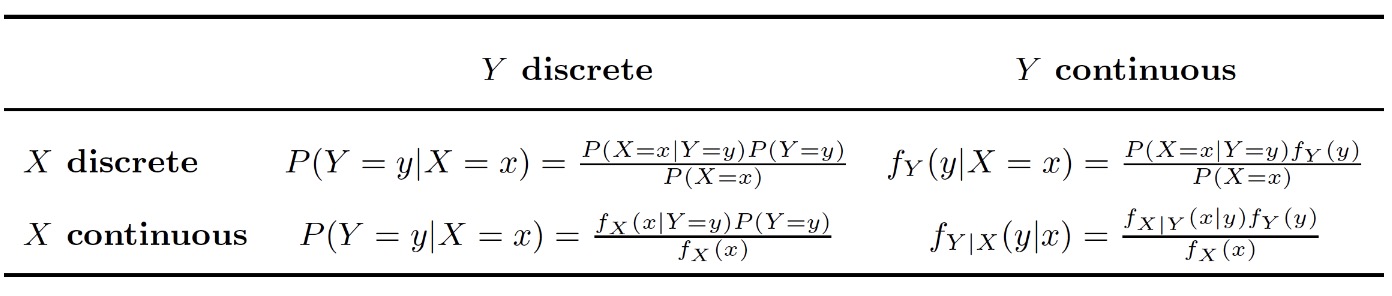

General Bayes’ Rule

Covariance and Correlation

Covariance

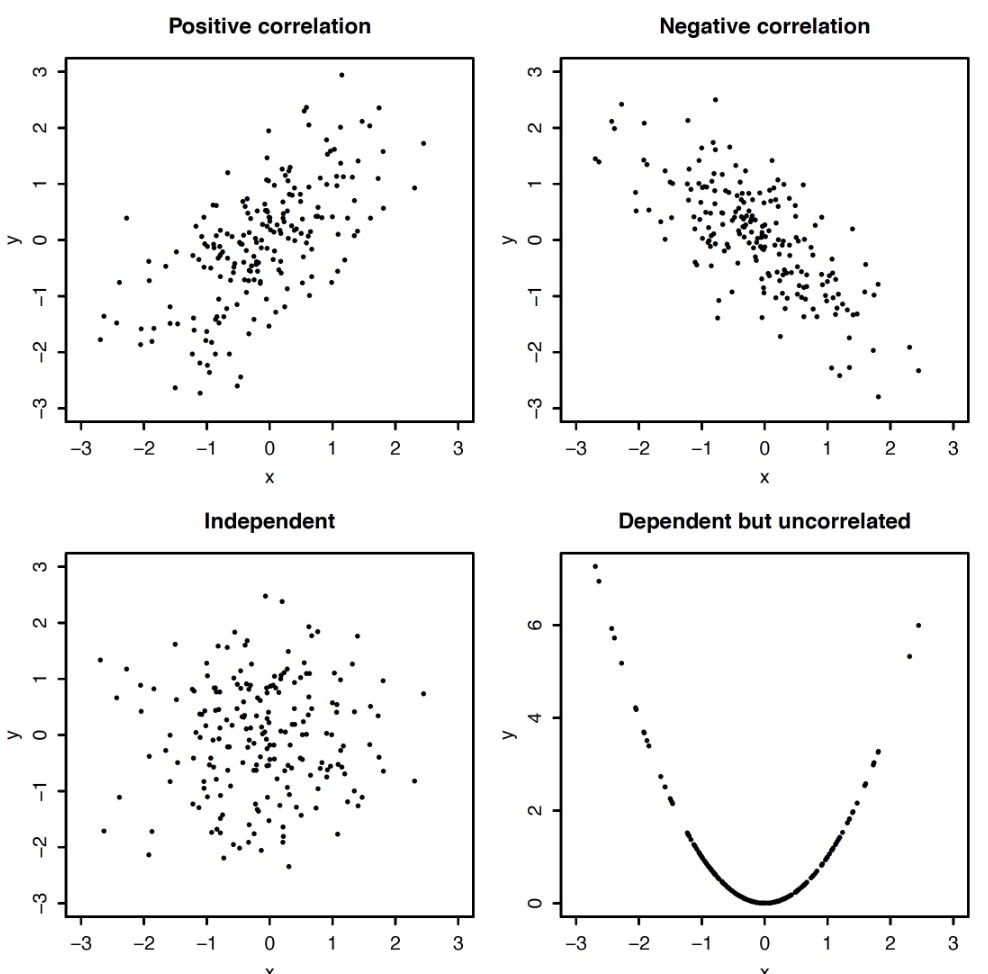

- Covariance measures the tendency of two r.v.s $X$ and $Y$ to go up or down together

- Positive covariance: when $X$ goes up, $Y$ tends to go up

- Negative covariance: when $X$ goes up, $Y$ tends to go down

Definition (Covariance) The covariance between r.v.s $X$ and $Y$ is

$$Cov(X,Y) = E\big((X-E(X))(Y-E(Y))\big) = E(XY) - E(X)E(Y)$$

Theorem (Uncorrelated) If $X$ and $Y$ are independent, then they are uncorrelated ($Cov(X,Y)=0$)

Properties of Covariance

- $Cov(X,X) = Var(X)$

- $Cov(X,Y) = Cov(Y,X)$

- $Cov(X,c) = 0$ for any constant $c$

- $Cov(a\cdot X,Y) = a\cdot Cov(X,Y)$

- $Cov(X+Y,Z) = Cov(X,Z)+Cov(Y,Z)$

- $Cov(X+Y,W+Z) = Cov(X,Z)+Cov(X,W)+Cov(Y,Z)+Cov(Y,W)$

- $Var(X+Y) = Var(X)+Var(Y) + 2Cov(X,Y)$

- For $n$ r.v.s $X_1,\dotsb ,X_n$: $Var(X_1+\dotsb+X_n) = Var(X_1)+\dotsb+Var(X_n) + 2\sum_{i<j}Cov(X_i,X_j)$
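
The covariance properties can be checked exactly on a small example. The sketch below treats each r.v. as a function of the outcome $(x,y)$ and computes $Cov$ as $E(XY)-E(X)E(Y)$; the joint PMF and the helper names (`expect`, `cov`, `var`) are hypothetical and chosen only for illustration.

```python
from fractions import Fraction

# Hypothetical joint PMF: joint[(x, y)] = P(X=x, Y=y). Values are illustrative only.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(3, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 4),
}

def expect(g):
    """E[g(X, Y)] computed from the joint PMF."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

def cov(g, h):
    """Cov(G, H) = E(GH) - E(G)E(H), where G = g(X, Y) and H = h(X, Y)."""
    return expect(lambda x, y: g(x, y) * h(x, y)) - expect(g) * expect(h)

def var(g):
    """Var(G) = Cov(G, G)."""
    return cov(g, g)

X = lambda x, y: x          # the r.v. X
Y = lambda x, y: y          # the r.v. Y
S = lambda x, y: x + y      # the r.v. X + Y

print(cov(X, Y))
print(var(S) == var(X) + var(Y) + 2 * cov(X, Y))
```

Because the arithmetic is done with exact fractions, the identity $Var(X+Y) = Var(X)+Var(Y)+2Cov(X,Y)$ holds with equality rather than up to rounding.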

Definition (Correlation) The correlation between r.v.s $X$ and $Y$ is

$$Corr(X,Y) = \frac{Cov(X,Y)}{\sqrt{Var(X)Var(Y)}}$$

Shifting $X$ and $Y$, or scaling them by positive constants, has no effect on the correlation: $Corr(aX+b, cY+d) = Corr(X,Y)$ for $a,c>0$

Theorem (Correlation Bounds) For any r.v.s $X$ and $Y$

$$-1\leq Corr(X,Y)\leq 1$$
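
A small numerical sketch of these two facts, assuming four equally likely outcomes (the data points and the helper names `mean` and `corr` are invented for illustration). It compares the correlation before and after a shift and positive rescaling, and also shows that a negative scale factor flips the sign.

```python
import math

# Hypothetical data: four equally likely (x, y) outcomes.
points = [(1, 2), (2, 1), (3, 5), (4, 3)]

def mean(values):
    return sum(values) / len(values)

def corr(xs, ys):
    """Corr(X, Y) = Cov(X, Y) / sqrt(Var(X) Var(Y)) for equally likely outcomes."""
    mx, my = mean(xs), mean(ys)
    cov = mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])
    var_x = mean([(x - mx) ** 2 for x in xs])
    var_y = mean([(y - my) ** 2 for y in ys])
    return cov / math.sqrt(var_x * var_y)

xs = [x for x, _ in points]
ys = [y for _, y in points]

base = corr(xs, ys)
shifted_scaled = corr([2 * x + 3 for x in xs], [5 * y - 1 for y in ys])
flipped = corr([-x for x in xs], ys)   # negative scaling flips the sign

print(base, shifted_scaled, flipped)
```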

Change of Variables

Theorem (Change of Variables in One Dimension) Let $X$ be a continuous r.v. with PDF $f_X$, and let $Y = g(X)$, where $g$ is differentiable and strictly increasing. Then the PDF of $Y$ is given by

$$f_Y(y) = f_X(x)\frac{dx}{dy}$$

where $x = g^{-1}(y)$

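Proof:

Since $g$ is strictly increasing, the CDF of $Y$ is

$$F_Y(y) = P(Y\leq y) = P(g(X)\leq y) = P(X\leq g^{-1}(y)) = F_X(g^{-1}(y)) = F_X(x)$$

Differentiating with respect to $y$, the result follows by the chain rule: $f_Y(y) = f_X(x)\frac{dx}{dy}$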
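
As a hedged worked example, take $X\sim N(0,1)$ and $Y=e^X$ (this choice of $g$ is an assumption for illustration, not part of the notes). Then $x=\log y$ and $dx/dy = 1/y$, so $f_Y(y) = f_X(\log y)/y$. The sketch compares this with a finite-difference derivative of the CDF $F_Y(y) = F_X(\log y)$; all function names are hypothetical.

```python
import math

def normal_pdf(x):
    """PDF of N(0, 1)."""
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

def normal_cdf(x):
    """CDF of N(0, 1), written in terms of the error function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

def pdf_by_change_of_variables(y):
    """f_Y(y) = f_X(x) * dx/dy with x = log(y) and dx/dy = 1/y."""
    x = math.log(y)
    return normal_pdf(x) * (1 / y)

def pdf_by_numeric_derivative(y, h=1e-5):
    """Central-difference derivative of F_Y(y) = F_X(log y)."""
    return (normal_cdf(math.log(y + h)) - normal_cdf(math.log(y - h))) / (2 * h)

for y in (0.5, 1.0, 2.5):
    print(y, pdf_by_change_of_variables(y), pdf_by_numeric_derivative(y))
```

The two columns should agree to several decimal places, which is what the chain-rule step in the proof predicts.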

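Theorem (Change of Variables) Let $X = (X_1,\dots,X_n)$ be a continuous random vector with joint PDF $f_X(\mathbf{x})$, and let $Y=g(X)$, where $g$ is an invertible function from $R^n$ to $R^n$. Write $\mathbf{y} = g(\mathbf{x})$, and suppose the partial derivatives $\frac{\partial x_i}{\partial y_j}$ exist and are continuous. They form the Jacobian matrix

$$\frac{\partial \mathbf{x}}{\partial \mathbf{y}} = \begin{pmatrix} \frac{\partial x_1}{\partial y_1} & \cdots & \frac{\partial x_1}{\partial y_n} \\ \vdots & \ddots & \vdots \\ \frac{\partial x_n}{\partial y_1} & \cdots & \frac{\partial x_n}{\partial y_n} \end{pmatrix}$$

Then, provided the determinant of the Jacobian is never zero, the joint PDF of $Y$ is

$$f_Y(\mathbf{y}) = f_X(\mathbf{x})\left|\det\frac{\partial \mathbf{x}}{\partial \mathbf{y}}\right|$$

where $\mathbf{x} = g^{-1}(\mathbf{y})$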

Convolutions

Theorem (Convolution Sums and Integrals)

If $X$ and $Y$ are independent discrete r.v.s, then the PMF of their sum $T = X+Y$ is

$$P(T=t) = \sum_{x} P(Y=t-x)P(X=x)$$

If $X$ and $Y$ are independent continuous r.v.s, then the PDF of their sum $T = X+Y$ is

$$f_T(t) = \int_{-\infty}^{\infty} f_Y(t-x)f_X(x)\,dx$$
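
The discrete convolution sum can be sketched with the total of two independent fair dice. The dice example and the helper name `convolve_pmf` are assumptions for illustration; the loop adds $P(X=x)P(Y=y)$ to the entry for $t=x+y$, which is the same sum $\sum_x P(X=x)P(Y=t-x)$ written outcome by outcome.

```python
from fractions import Fraction

def convolve_pmf(p_x, p_y):
    """PMF of T = X + Y for independent X and Y, given as dicts value -> probability."""
    p_t = {}
    for x, px in p_x.items():
        for y, py in p_y.items():
            p_t[x + y] = p_t.get(x + y, 0) + px * py
    return p_t

# Hypothetical example: two independent fair six-sided dice.
die = {face: Fraction(1, 6) for face in range(1, 7)}
total = convolve_pmf(die, die)

for t in sorted(total):
    print(t, total[t])
```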

Order Statistics

Definition (Order Statistics) For r.v.s $X_1,X_2,\dots,X_n$, the order statistics are the random variables $X_{(1)},\dots,X_{(n)}$, where

- $X_{(1)} = \min(X_1,\dots,X_n)$

- $X_{(2)}$ is the second-smallest of $X_1,\dots,X_n$

- $\vdots$

- $X_{(n)} = \max(X_1,\dots,X_n)$

The order statistics are dependent. For example, if $X_{(1)} = 100$, then $X_{(n)}$ is forced to be $\geq 100$

We focus on the case where $X_1,\dots,X_n$ are i.i.d. continuous r.v.s with CDF $F$ and PDF $f$

Theorem (CDF of Order Statistics) Let $X_1,\dots,X_n$ be i.i.d. continuous r.v.s with CDF $F$. Then the CDF of the $j^{th}$ order statistic $X_{(j)}$ is

$$P(X_{(j)}\leq x) = \sum_{k=j}^{n}\binom{n}{k}F(x)^k(1-F(x))^{n-k}$$

Proof:

Let’s start with the special case $j=n$, where $X_{(n)}=\max(X_1,\dots,X_n)$:

$$P(X_{(n)}\leq x) = P(X_1\leq x,\dots,X_n\leq x) = F(x)^n$$

Next, consider the special case $j=1$, where $X_{(1)} = \min(X_1,\dots,X_n)$:

$$P(X_{(1)}\leq x) = 1 - P(X_1> x,\dots,X_n> x) = 1-(1-F(x))^n$$

This result can be rewritten as $\sum_{k=1}^n\binom{n}{k} F(x)^k (1-F(x))^{n-k}$

To see this, expand $[F(x) + 1 -F(x)]^n = 1$ with the binomial theorem and subtract the $k=0$ term $(1-F(x))^n$

Finally, consider the general case $1<j<n$. The event $X_{(j)}\leq x$ means that at least $j$ of the $X_i$ fall to the left of $x$

Let $N$ denote the number of $X_i$ landing to the left of $x$. Each $X_i$ lands to the left of $x$ with probability $P(X_i\leq x) = F(x)$, independently of the others, so $N\sim Bin(n,F(x))$ and

$$P(X_{(j)}\leq x) = P(N\geq j) = \sum_{k=j}^{n}\binom{n}{k}F(x)^k(1-F(x))^{n-k}$$
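
The binomial argument translates directly into a short computation: $P(X_{(j)}\leq x)$ is the probability that a $Bin(n,F(x))$ count is at least $j$. The sketch below takes the value $F(x)$ as an input (the function name `order_stat_cdf` and the numbers used are illustrative assumptions) and checks it against the two special cases $j=n$ and $j=1$.

```python
from math import comb

def order_stat_cdf(j, n, F_x):
    """P(X_(j) <= x) = P(N >= j) with N ~ Bin(n, F_x), where F_x = F(x)."""
    return sum(comb(n, k) * F_x ** k * (1 - F_x) ** (n - k) for k in range(j, n + 1))

n, F_x = 5, 0.3   # hypothetical sample size and CDF value

print(order_stat_cdf(n, n, F_x), F_x ** n)             # maximum: F(x)^n
print(order_stat_cdf(1, n, F_x), 1 - (1 - F_x) ** n)   # minimum: 1 - (1 - F(x))^n
```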

Theorem (PDF of Order Statistic) Let $X_1,\dots,X_n$ be i.i.d. continuous r.v.s with CDF $F$ and PDF $f$. Then the marginal PDF of the $j^{th}$ order statistic $X_{(j)}$ is

$$f_{X_{(j)}}(x) = n\binom{n-1}{j-1}f(x)F(x)^{j-1}(1-F(x))^{n-j}$$

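Theorem (Joint PDF) Let $X_1,\dots,X_n$ be i.i.d. continuous r.v.s with PDF $f$. Then the joint PDF of all the order statistics is

$$f_{X_{(1)},\dots,X_{(n)}}(x_1,\dots,x_n) = n!\prod_{i=1}^{n}f(x_i), \quad x_1<x_2<\dots<x_n$$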

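Example 1 (Order Statistics of Uniforms) $U_1,U_2,\dots,U_n$ are i.i.d. $Unif(0,1)$ r.v.s with CDF $F$ and PDF $f$

For $0\leq x\leq 1$, $f(x) = 1$ and $F(x) = x$. Then

$$f_{U_{(j)}}(x) = n\binom{n-1}{j-1}x^{j-1}(1-x)^{n-j}$$

which is the $Beta(j, n-j+1)$ PDF, so $U_{(j)}\sim Beta(j,n-j+1)$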
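
A quick numerical sanity check of the uniform case, assuming the density $n\binom{n-1}{j-1}x^{j-1}(1-x)^{n-j}$ on $[0,1]$ obtained from the PDF theorem with $f(x)=1$ and $F(x)=x$. The sketch integrates it with a simple midpoint rule (the helper names and the choice $n=5$, $j=2$ are illustrative) and compares the mean with $j/(n+1)$, the mean of a $Beta(j,n-j+1)$ distribution.

```python
from math import comb

def uniform_order_stat_pdf(x, j, n):
    """PDF of the j-th order statistic of n i.i.d. Unif(0, 1) r.v.s, for 0 <= x <= 1."""
    return n * comb(n - 1, j - 1) * x ** (j - 1) * (1 - x) ** (n - j)

def integrate(func, steps=10_000):
    """Midpoint-rule approximation of the integral of func over [0, 1]."""
    h = 1.0 / steps
    return sum(func((i + 0.5) * h) for i in range(steps)) * h

n, j = 5, 2   # hypothetical sample size and rank
total_mass = integrate(lambda x: uniform_order_stat_pdf(x, j, n))
mean = integrate(lambda x: x * uniform_order_stat_pdf(x, j, n))

print(total_mass)           # should be close to 1
print(mean, j / (n + 1))    # should be close to each other
```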